Showing posts with label heterogeneous computing. Show all posts

Thursday, June 4, 2015

Sunday, October 5, 2014

Least required GPU parallelism for kernel executions

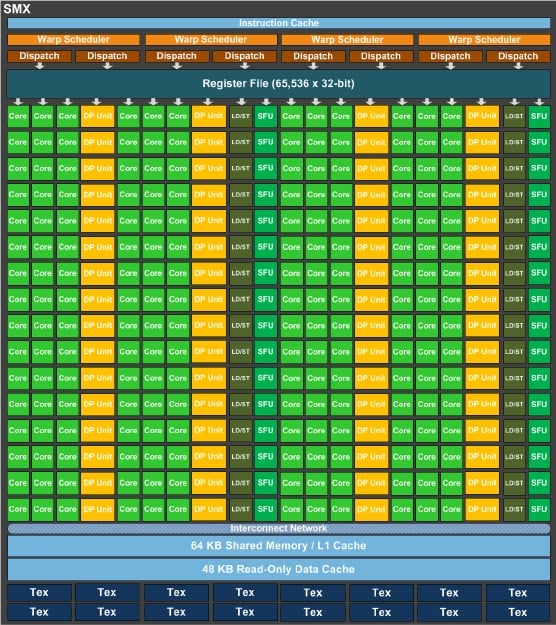

GPUs require a vast number of threads per kernel invocation in order to utilize all execution units. As a first thought, one should spawn at least as many threads as there are shader units (CUDA cores, or processing elements). However, this is not enough: the type of scheduling must also be taken into account. Scheduling within a compute unit is done by multiple schedulers, each of which can dispatch threads only to its own group of shader units. For instance, a Fermi SM consists of 32 shader units, yet at least 64 threads are required, because it has 2 schedulers: the first can schedule threads only on the first group of 16 shader units and the second only on the remaining group. Since each scheduler issues a whole warp of 32 threads at a time, both schedulers are kept busy only when at least 2 warps are resident. What about other GPUs? What is the minimum number of threads required to enable all shader units? The answer lies in the schedulers of the compute units of each GPU architecture.

NVidia Fermi GPUs

Each SM (compute unit) has 2 schedulers. Each scheduler handles 32 threads (the warp size), so 2x32=64 threads are the minimum required per SM. For instance, a GTX480 with 15 CUs requires at least 960 active threads.

NVidia Kepler GPUs

Each SM (compute unit) has 4 schedulers. Each scheduler handles 32 threads (the warp size), so 4x32=128 threads are the minimum required per SM. A GTX680 with 8 CUs requires at least 1024 active threads.

In addition, more independent instructions are required in the instruction stream (instruction-level parallelism) in order to utilize the extra 64 shader units of each CU (192 in total).

NVidia Maxwell GPUs

Same as Kepler: 4 schedulers per SM, so 4x32=128 threads are the minimum required per SM. A GTX980 with 16 CUs requires at least 2048 active threads.

The extra requirement for independent instructions does not apply here, since each CU has only 128 shader units.
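The difference between Kepler and Maxwell can be put into numbers with a deliberately crude model. The sketch below assumes that each scheduler issues instructions for one 32-thread warp per cycle, and `issue_per_scheduler` is a hypothetical knob (not a hardware parameter) for the average number of independent instructions a scheduler manages to issue. Real hardware has more constraints, so treat the result as an illustration only.

```python
def lane_utilization(shaders_per_cu, schedulers, warp_size=32,
                     issue_per_scheduler=1.0):
    """Fraction of shader units kept busy per cycle in a simplified model.

    Assumption: every scheduler issues `issue_per_scheduler` instructions
    per cycle, each covering one warp of `warp_size` threads.
    """
    issued_lanes = schedulers * warp_size * issue_per_scheduler
    return min(issued_lanes, shaders_per_cu) / shaders_per_cu


# Kepler: 192 shader units, 4 schedulers, no instruction-level parallelism.
print(f"Kepler, 1 instr/scheduler:   {lane_utilization(192, 4):.0%}")
# Kepler with enough independent instructions to feed the extra 64 units.
print(f"Kepler, 1.5 instr/scheduler: "
      f"{lane_utilization(192, 4, issue_per_scheduler=1.5):.0%}")
# Maxwell: 128 shader units, 4 schedulers.
print(f"Maxwell, 1 instr/scheduler:  {lane_utilization(128, 4):.0%}")
```

In this model, 128 threads alone leave a third of a Kepler CU idle, while the same 128 threads already cover every shader unit of a Maxwell CU.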

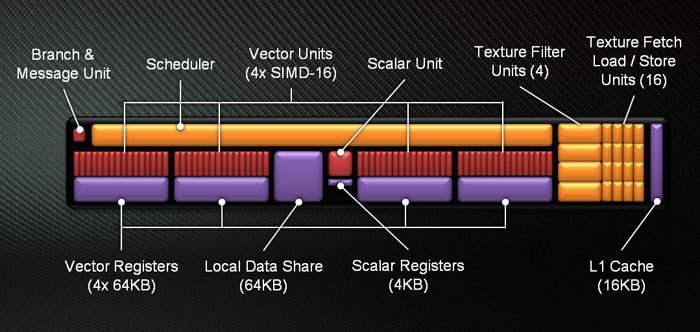

AMD GCN GPUs

For AMD GCN units the requirement is even more pronounced. Each CU's scheduler handles threads in four groups, one for each SIMD unit, which is like having 4 schedulers per CU. Furthermore, threads execute in groups of 64 (a wavefront) instead of 32. Therefore each CU requires at least 4x64=256 threads. For instance, an R9-280X with 32 CUs requires a vast 8192 threads! This explains why, in many research papers, AMD GPUs fail to stand against NVidia GPUs for small problem sizes, where the number of active threads is not enough.
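The arithmetic for all four architectures follows the same pattern: schedulers per CU, times threads per scheduler, times number of CUs. Here is a minimal sketch of it, using only the figures quoted in this post. The names `Arch`, `ARCHS` and `min_active_threads` are made up for the illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Arch:
    schedulers_per_cu: int   # schedulers (or SIMD units on GCN) per CU
    threads_per_group: int   # warp size (32) or wavefront size (64)

    @property
    def min_threads_per_cu(self):
        return self.schedulers_per_cu * self.threads_per_group


# Figures as stated in the post above.
ARCHS = {
    "Fermi": Arch(2, 32),
    "Kepler": Arch(4, 32),
    "Maxwell": Arch(4, 32),
    "GCN": Arch(4, 64),
}


def min_active_threads(arch_name, compute_units):
    """Minimum threads needed so that every scheduler on every CU has work."""
    return ARCHS[arch_name].min_threads_per_cu * compute_units


examples = [
    ("GTX480", "Fermi", 15),
    ("8-CU Kepler part", "Kepler", 8),
    ("GTX980", "Maxwell", 16),
    ("R9-280X", "GCN", 32),
]
for card, arch, cus in examples:
    print(f"{card:18s} {arch:8s} {cus:3d} CUs -> "
          f"{min_active_threads(arch, cus)} threads")
```

These are lower bounds for touching all shader units. Hiding memory latency usually needs considerably more threads than this.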

Tuesday, January 28, 2014

Benchmarking the capabilities of your OpenCL device with clpeak, etc.

If you're interested in benchmarking the performance of your GPU/CPU with OpenCL, you could try a simple program named clpeak. It's hosted on GitHub: https://github.com/krrishnarraj/clpeak

For instance, here is the output on the A6-1450 APU, first for the GPU device (Kalindi) and then for the CPU device.

Platform: AMD Accelerated Parallel Processing

Device: Kalindi

Driver version : 1214.3 (VM) (Linux x64)

Compute units : 2

Global memory bandwidth (GBPS)

float : 6.60

float2 : 6.71

float4 : 6.45

float8 : 3.51

float16 : 1.83

Single-precision compute (GFLOPS)

float : 100.63

float2 : 101.26

float4 : 100.94

float8 : 100.32

float16 : 99.08

Double-precision compute (GFLOPS)

double : 6.35

double2 : 6.37

double4 : 6.36

double8 : 6.34

double16 : 6.32

Integer compute (GIOPS)

int : 20.33

int2 : 20.39

int4 : 20.36

int8 : 20.33

int16 : 20.32

Transfer bandwidth (GBPS)

enqueueWriteBuffer : 1.80

enqueueReadBuffer : 1.98

enqueueMapBuffer(for read) : 84.42

memcpy from mapped ptr : 1.81

enqueueUnmap(after write) : 54.32

memcpy to mapped ptr : 1.87

Kernel launch latency : 138.08 us

Device: AMD A6-1450 APU with Radeon(TM) HD Graphics

Driver version : 1214.3 (sse2,avx) (Linux x64)

Compute units : 4

Global memory bandwidth (GBPS)

float : 1.97

float2 : 2.51

float4 : 1.95

float8 : 2.79

float16 : 3.54

Single-precision compute (GFLOPS)

float : 1.30

float2 : 2.50

float4 : 5.01

float8 : 9.21

float16 : 1.07

Double-precision compute (GFLOPS)

double : 0.62

double2 : 1.35

double4 : 2.56

double8 : 6.27

double16 : 2.44

Integer compute (GIOPS)

int : 1.60

int2 : 1.22

int4 : 4.70

int8 : 8.08

int16 : 7.91

Transfer bandwidth (GBPS)

enqueueWriteBuffer : 2.67

enqueueReadBuffer : 2.03

enqueueMapBuffer(for read) : 13489.22

memcpy from mapped ptr : 2.02

enqueueUnmap(after write) : 26446.84

memcpy to mapped ptr : 2.03

Kernel launch latency : 32.74 us
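To compare the two devices without reading the listing by eye, the text output can be parsed with a few lines of Python. This is only a sketch that assumes the `name : value` layout shown above; `parse_clpeak` and `best_of` are hypothetical helpers, not part of clpeak, and `SAMPLE` is a shortened excerpt of the listing.

```python
import re

SAMPLE = """\
Device: Kalindi
Compute units : 2
Single-precision compute (GFLOPS)
float : 100.63
float4 : 100.94
Double-precision compute (GFLOPS)
double : 6.35
Device: AMD A6-1450 APU with Radeon(TM) HD Graphics
Compute units : 4
Single-precision compute (GFLOPS)
float : 1.30
float8 : 9.21
Double-precision compute (GFLOPS)
double : 0.62
"""


def parse_clpeak(text):
    """Return {device: {section: {metric: float}}}.

    Lines without a colon are treated as section headers. Metadata lines
    that appear before the first section of a device are skipped.
    A trailing line such as 'Kernel launch latency' would be filed under
    the section that precedes it.
    """
    results, device, section = {}, None, None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith("Device:"):
            device = line.split(":", 1)[1].strip()
            results[device] = {}
            section = None
        elif ":" not in line:
            section = line
        elif device is not None and section is not None:
            key, _, value = line.partition(":")
            match = re.match(r"\s*([0-9.]+)", value)
            if match:
                metrics = results[device].setdefault(section, {})
                metrics[key.strip()] = float(match.group(1))
    return results


def best_of(results, device, section):
    """Highest value a device reached in one section."""
    return max(results[device][section].values())


parsed = parse_clpeak(SAMPLE)
sp = "Single-precision compute (GFLOPS)"
for name in parsed:
    print(f"{name}: best single precision = {best_of(parsed, name, sp)} GFLOPS")
```

Taking the best value per section matters mostly for the CPU device, whose throughput depends heavily on the vector width, while the GPU figures are nearly flat.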

P.S.

1) Some performance measures of the recently released Kaveri APU are provided on Anandtech:

http://www.anandtech.com/show/7711/floating-point-peak-performance-of-kaveri-and-other-recent-amd-and-intel-chips

2) If you are interested you can find the presentation of the Kaveri on Tech-Day in PDF format here:

http://www.pcmhz.com/media/2014/01-ianuarie/14/amd/AMD-Tech-Day-Kaveri.pdf

3) The Alpha 2 of Ubuntu 14.04 seems to resolve the shutdown problem of the Temash laptop (Acer Aspire v5 122p). It must be due to the 3.13 kernel update. So, I'm looking forward to the final Ubuntu 14.04 release.

Saturday, December 14, 2013

A silly(?) prediction of a future CPU

First, let me warn you that what follows is not based on any recent scientific discovery but just on my imagination. As more and more computational units are nowadays fused within the CPU package, the following picture could be some sort of depiction of a future chip.

Since the CPU nowadays already incorporates GPU elements, the following is a (ridiculous) projection of a future central processing unit. It could contain a variety of combined cores, for instance:

2) Massively parallel cores (just like GPU compute units)

3) FPGA cores (for even more specialized tasks)

4) Quantum cores (???!! for NP-complete search problems?)

The last element certainly cannot be produced with current technology, but in the future... who knows. It might turn out to be just another component of the CPU package. What would it be called? QAPU?
